
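Go's goroutines make concurrent web scraping very fast. In the author's run, the news titles from 100 pages of cnblogs (博客园) news were all scraped in about one second.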

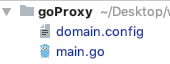

```go
package main

import (
	"bytes"
	"fmt"
	"log"
	"net/http"
	"strconv"
	"sync"
	"time"

	"github.com/PuerkitoBio/goquery"
)

// client is shared by all goroutines; http.Client is safe for concurrent use.
// The 10-second timeout is an arbitrary choice so a stalled request cannot hang forever.
var client = &http.Client{Timeout: 10 * time.Second}

// Scraper downloads one page of the cnblogs news list and returns the
// title and link of every news entry on it as a formatted string.
func Scraper(page string) string {
	// Request the HTML page.
	scrapeURL := "https://news.cnblogs.com/n/page/" + page
	request, err := http.NewRequest("GET", scrapeURL, nil)
	if err != nil {
		log.Fatal(err)
	}
	request.Header.Set("Accept", "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8")
	request.Header.Set("Accept-Charset", "GBK,utf-8;q=0.7,*;q=0.3")
	// Accept-Encoding is deliberately left unset: when it is set by hand,
	// Go's transport no longer decompresses gzip responses automatically.
	request.Header.Set("Accept-Language", "zh-CN,zh;q=0.8")
	request.Header.Set("Cache-Control", "max-age=0")
	request.Header.Set("Connection", "keep-alive")
	request.Header.Set("User-Agent", "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/68.0.3440.75 Safari/537.36")

	res, err := client.Do(request)
	if err != nil {
		log.Fatal(err)
	}
	defer res.Body.Close()
	if res.StatusCode != http.StatusOK {
		log.Fatalf("status code error: %d %s", res.StatusCode, res.Status)
	}

	// Load the HTML document.
	doc, err := goquery.NewDocumentFromReader(res.Body)
	if err != nil {
		log.Fatal(err)
	}

	// Find the news entries and record each title and link.
	var buffer bytes.Buffer
	buffer.WriteString("**********Scraped page " + page + "**********\n")
	doc.Find(".content .news_entry").Each(func(i int, s *goquery.Selection) {
		link := s.Find("a")
		title := link.Text()
		url, _ := link.Attr("href")
		buffer.WriteString("News " + strconv.Itoa(i) + ": " + title + "\nhttps://news.cnblogs.com" + url + "\n")
	})
	return buffer.String()
}

func main() {
	// Since Go 1.5, GOMAXPROCS defaults to the number of CPUs,
	// so no explicit runtime.GOMAXPROCS call is needed.
	const pages = 100

	// Buffered to hold every result, so no sender blocks before wg.Wait returns.
	ch := make(chan string, pages)
	var wg sync.WaitGroup

	for i := 1; i <= pages; i++ {
		wg.Add(1)
		go func(i int) {
			defer wg.Done()
			// page is local to each goroutine, so goroutines never race on a shared variable.
			page := strconv.Itoa(i)
			fmt.Printf("Scraping page %s...\n", page)
			ch <- Scraper(page)
		}(i)
	}

	// Once every goroutine has sent its result, close the channel
	// so the range loop below stops after exactly `pages` values.
	wg.Wait()
	close(ch)

	// Print the results (in completion order, not page order).
	for result := range ch {
		fmt.Println(result)
	}
}
```
